Artificial intelligence aims to imitate the cognitive abilities of human beings, perhaps in the hope of one day including a concept considered specific to humans, or at least to the most evolved animal species: consciousness. Still difficult to define, consciousness divides the scientific community over whether it can be built into machines. Indeed, “artificial” and “consciousness” form a strong paradox that some consider impossible to reconcile. Where do technological advances in this area stand?

From the outset, the problem raises the fundamental question of how to define consciousness. The word comes from Latin and literally means “to know (scientia) with (cum)”, which suggests the idea of an accompaniment, a knowledge that is with oneself and would therefore be specific to humans. Consciousness would be the ability to step back from oneself in order to "represent" oneself, and would go hand in hand with a body and a morality, which tends to exclude robots and other artificial intelligences from ever acquiring it.

The famous "I think, therefore I am" of Descartes seems to follow the same anthropocentric principle. In reality, the definition of consciousness remains imprecise; it is, according to André Comte-Sponville, "one of the most difficult words to define". For some experts, a consciousness that attempts to define itself is a problem in its own right.

Alan Turing and John von Neumann, founders of the modern science of computation, envisioned the possibility that machines would eventually mimic all of the brain's abilities, including consciousness. Daniel Dennett, of Tufts University (Massachusetts), considers that the Turing test is enough to prove this possibility, if it is carried out “with the appropriate vigor, aggressiveness and intelligence”. As a reminder, in the Turing test a machine must converse with a human interrogator well enough that the interrogator cannot reliably tell it apart from a human; the test measures indistinguishable behavior, not consciousness directly.

To find out whether machines could one day be endowed with consciousness, it is first necessary to examine how it arises in the human brain. Last year, three neuroscientists delved into the subject by suggesting that the word "consciousness" covers two different types of information-processing computation in the brain: the selection of information for global broadcasting, which makes it flexibly available for computation and report (C1, consciousness in the first sense), and the self-monitoring of those computations, which leads to a subjective feeling of certainty or error (C2, consciousness in the second sense).

C1 corresponds to the transitive sense of consciousness and refers to the relationship between a cognitive system and a specific object of thought, for example a mental representation of "light". Conscious information remains in mind, available for later use. The C2 level goes further, since it concerns self-monitoring: a reflexive consciousness specific to humans. “The cognitive system is able to monitor its own processes and obtain information about itself”, write the authors. “Human beings know a great deal about themselves, including information as diverse as the layout and position of their body, whether they know or perceive something, or whether they have just made a mistake. This feeling of awareness corresponds to what is commonly called introspection, or what psychologists call 'metacognition', i.e. the ability to conceive and use internal representations of one's own knowledge and capabilities.”
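The distinction between the two senses can be illustrated with a deliberately simplified sketch. It is not the researchers' model: the `Candidate` class, the `strength` score, the two modules and the margin-based confidence measure are all assumptions made for illustration. The first function mimics C1 (one representation is selected and made available to every module), the second mimics C2 (the system estimates how certain its own selection was).

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    label: str       # content of the representation, e.g. "light"
    strength: float  # hypothetical salience score between 0 and 1


def select_and_broadcast(candidates, modules):
    """C1 analogue: pick the strongest candidate and pass it to every module."""
    winner = max(candidates, key=lambda c: c.strength)
    reports = {name: fn(winner.label) for name, fn in modules.items()}
    return winner, reports


def self_monitor(candidates, winner):
    """C2 analogue: confidence as the winner's margin over the runner-up."""
    others = [c.strength for c in candidates if c is not winner]
    runner_up = max(others, default=0.0)
    return winner.strength - runner_up


# Hypothetical inputs and modules, chosen only for the example.
candidates = [
    Candidate("light", 0.9),
    Candidate("sound", 0.4),
    Candidate("touch", 0.2),
]
modules = {
    "memory": lambda label: f"stored {label}",
    "speech": lambda label: f"I perceive {label}",
}

winner, reports = select_and_broadcast(candidates, modules)
confidence = self_monitor(candidates, winner)
```

A small margin between the winner and the runner-up would correspond to a feeling of uncertainty. A system with only the first function computes and reports, but has no estimate of how reliable its own choice was.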

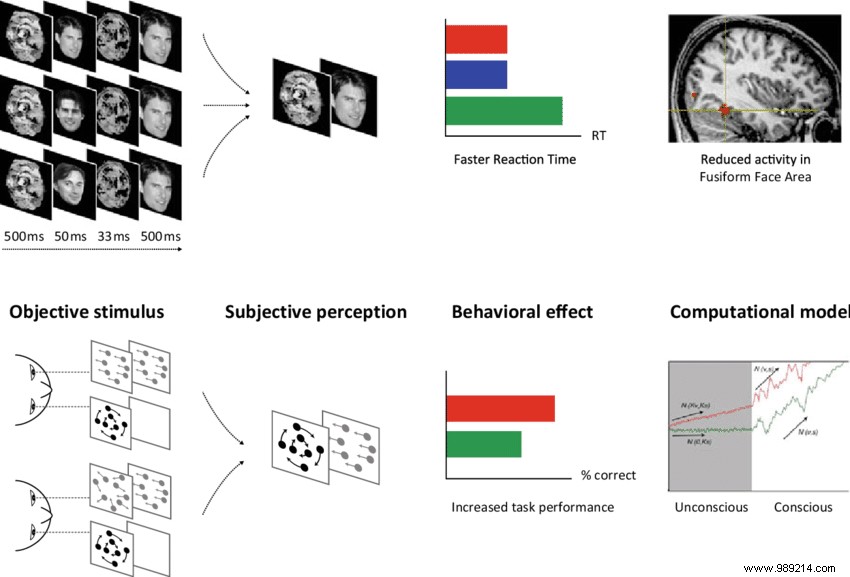

Cognitive neuroscientists have developed various ways of presenting images or sounds without inducing conscious experience, and have then used behavioral measures and brain imaging to probe how deeply these stimuli are processed. Neuroimaging methods reveal that the vast majority of brain areas can be activated unconsciously, for example during invariant face recognition: the processing of a person's face is facilitated when it is preceded by the subliminal presentation of a totally different view of the same person, which indicates unconscious invariant recognition (level C0).

In fact, the conscious system (C1+C2) would be necessary to constitute a "strong" artificial intelligence: one that is self-learning, self-evaluating and able to handle any intellectual task. By contrast, according to the neuroscientists, most current machine learning systems lack self-monitoring: they compute without taking into account the extent and limits of their knowledge, or the fact that others may have a point of view different from theirs. "We argue that despite their recent successes, current machines still primarily implement computations that mirror unconscious (C0) processing in the human brain", conclude the three researchers.

To date, most scientists agree that what exists today is a "weak" artificial intelligence, and that answering the question of machine consciousness in the affirmative would simply imply another form of consciousness.

However, even if there is still a gap between the functioning of our brain and that of an algorithm, it is clear that refinements in machine learning (inspired by neurobiology) have led to artificial neural networks that sometimes surpass humans. Advances in computer hardware and learning algorithms now allow these networks to handle complex problems (e.g., machine translation) with very good success rates. Could they be at the dawn of consciousness?

“Artificial consciousness can be advanced by studying the architectures that allow the human brain to generate consciousness, then transferring that knowledge into computer algorithms”, continue the three researchers. Some aspects of cognition and of the neuroscience of consciousness may thus prove relevant to machines without amounting to "consciousness" as such.

There is no shortage of examples of advances in the field of artificial intelligence. For instance, a recent architecture, PathNet, uses a genetic algorithm to learn which path through its many specialized neural networks is the most suitable for a given task. This architecture exhibits robust and flexible performance, as well as generalization across tasks, and could be a first step towards primate-like flexibility of consciousness.
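The idea of using a genetic algorithm to choose a path through a set of modules can be sketched in a few lines. This is a toy illustration loosely in the spirit of that description, not PathNet itself: the neural networks are replaced by fixed random scores, and the layer count, module count, mutation rate and tournament scheme are all assumptions chosen for the example.

```python
import random

LAYERS, MODULES = 3, 4  # hypothetical sizes: 3 layers, 4 candidate modules each


def make_scores(rng):
    """Stand-in for trained modules: one fixed usefulness score per module."""
    return [[rng.random() for _ in range(MODULES)] for _ in range(LAYERS)]


def fitness(path, scores):
    """Fitness of a path = sum of the scores of the module chosen in each layer."""
    return sum(scores[layer][module] for layer, module in enumerate(path))


def mutate(path, rng, rate=0.2):
    """Copy a path, re-drawing each layer's module with probability `rate`."""
    return [rng.randrange(MODULES) if rng.random() < rate else m for m in path]


def evolve(scores, rng, population_size=8, rounds=200):
    """Pairwise tournaments: the fitter path survives, the other is replaced."""
    population = [
        [rng.randrange(MODULES) for _ in range(LAYERS)]
        for _ in range(population_size)
    ]
    for _ in range(rounds):
        a, b = rng.sample(range(population_size), 2)
        if fitness(population[a], scores) < fitness(population[b], scores):
            a, b = b, a  # make `a` the index of the fitter path
        population[b] = mutate(population[a], rng)
    return max(population, key=lambda p: fitness(p, scores))


rng = random.Random(0)  # fixed seed so runs are repeatable
scores = make_scores(rng)
best_path = evolve(scores, rng)
best_possible = sum(max(row) for row in scores)
```

Here `best_path` lists, layer by layer, the index of the module the search settled on, and `best_possible` gives the upper bound against which its fitness can be compared. In a real system the fitness would come from training and evaluating the networks along each path rather than from a lookup table.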

At the end of 2021, Chinese scientists developed an artificial intelligence that can perform certain tasks usually carried out by a prosecutor. Reported to be 97% reliable (insufficient for some experts), it is said to recognize and lay charges for eight of the most common crimes committed in Shanghai.

Even more recently, OpenAI co-founder and chief scientist Ilya Sutskever said that today's large neural networks may be "slightly conscious." A year ago, he said, "You're going to see much smarter systems in 10 or 15 years, and I think it's very likely that these systems will have a completely astronomical impact on society."

We should therefore beware of the catastrophic abuses that could result from a massive development of artificial intelligence. Indeed, any powerful tool can be used for good or for ill. In the worst case, it could "take over" from humans, much as humans did with animals, which would make us almost obsolete.

Even if scientific and technological advances make it conceivable, artificial intelligence is not close to possessing consciousness in the sense that we understand it: an entity capable of reflecting on itself, of integrating emotions into rational decision-making, and so on. Seven million years of human brain evolution can hardly be replaced by algorithms, no matter how elaborate. However, another form of "consciousness", more and more evolved, could well see the light of day.